Databricks

DataHub supports integration with Databricks ecosystem using a multitude of connectors, depending on your exact setup.

Databricks Hive

The simplest way to integrate is usually via the Hive connector. The Hive starter recipe has a section describing how to connect to your Databricks workspace.

Databricks Unity Catalog (new)

The recently introduced Unity Catalog provides a new way to govern your assets within the Databricks lakehouse. If you have enabled Unity Catalog, you can use the unity-catalog source (see below) to integrate your metadata into DataHub as an alternate to the Hive pathway.

Databricks Spark

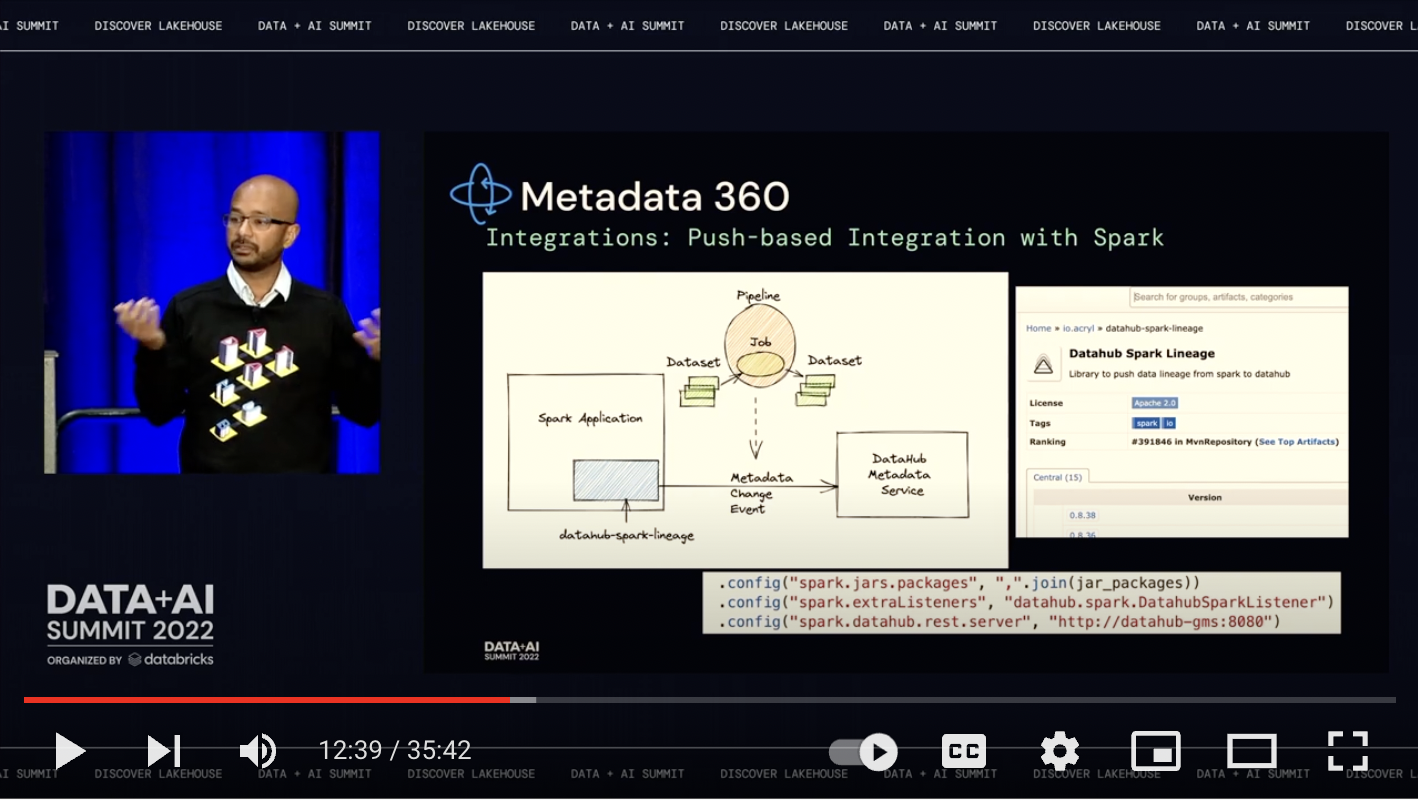

To complete the picture, we recommend adding push-based ingestion from your Spark jobs to see real-time activity and lineage between your Databricks tables and your Spark jobs. Use the Spark agent to push metadata to DataHub using the instructions here.

Watch the DataHub Talk at the Data and AI Summit 2022

For a deeper look at how to think about DataHub within and across your Databricks ecosystem, watch the recording of our talk at the Data and AI Summit 2022.

Module unity-catalog

Important Capabilities

| Capability | Status | Notes |

|---|---|---|

| Asset Containers | ✅ | Enabled by default |

| Column-level Lineage | ✅ | Enabled by default |

| Descriptions | ✅ | Enabled by default |

| Detect Deleted Entities | ✅ | Optionally enabled via stateful_ingestion.remove_stale_metadata |

| Domains | ✅ | Supported via the domain config field |

| Platform Instance | ✅ | Enabled by default |

| Schema Metadata | ✅ | Enabled by default |

| Table-Level Lineage | ✅ | Enabled by default |

This plugin extracts the following metadata from Databricks Unity Catalog:

- metastores

- schemas

- tables and column lineage

Prerequisities

- Generate a Databrick Personal Access token following the guide here: https://docs.databricks.com/dev-tools/api/latest/authentication.html#generate-a-personal-access-token

- Get your workspace Id where Unity Catalog is following: https://docs.databricks.com/workspace/workspace-details.html#workspace-instance-names-urls-and-ids

- Check the starter recipe below and replace Token and Workspace Id with the ones above.

CLI based Ingestion

Install the Plugin

pip install 'acryl-datahub[unity-catalog]'

Starter Recipe

Check out the following recipe to get started with ingestion! See below for full configuration options.

For general pointers on writing and running a recipe, see our main recipe guide.

source:

type: unity-catalog

config:

workspace_url: https://my-workspace.cloud.databricks.com

token: "mygenerated_databricks_token"

#metastore_id_pattern:

# deny:

# - 11111-2222-33333-44-555555

#catalog_pattern:

# allow:

# - my-catalog

#schema_pattern:

# deny:

# - information_schema

#table_pattern:

# allow:

# - test.lineagedemo.dinner

# First you have to create domains on Datahub by following this guide -> https://datahubproject.io/docs/domains/#domains-setup-prerequisites-and-permissions

#domain:

# urn:li:domain:1111-222-333-444-555:

# allow:

# - main.*

stateful_ingestion:

enabled: true

pipeline_name: acme-corp-unity

# sink configs if needed

Config Details

- Options

- Schema

Note that a . is used to denote nested fields in the YAML recipe.

View All Configuration Options

| Field [Required] | Type | Description | Default | Notes |

|---|---|---|---|---|

| include_column_lineage [✅] | boolean | Option to enable/disable lineage generation. Currently we have to call a rest call per column to get column level lineage due to the Databrick api which can slow down ingestion. | True | |

| include_table_lineage [✅] | boolean | Option to enable/disable lineage generation. | True | |

| platform_instance [✅] | string | The instance of the platform that all assets produced by this recipe belong to | None | |

| token [✅] | string | Databricks personal access token | None | |

| workspace_name [✅] | string | Name of the workspace. Default to deployment name present in workspace_url | None | |

| workspace_url [✅] | string | Databricks workspace url | None | |

| env [✅] | string | The environment that all assets produced by this connector belong to | PROD | |

| catalog_pattern [✅] | AllowDenyPattern | Regex patterns for catalogs to filter in ingestion. Specify regex to match the catalog name | {'allow': ['.*'], 'deny': [], 'ignoreCase': True} | |

| catalog_pattern.allow [❓ (required if catalog_pattern is set)] | array(string) | None | ||

| catalog_pattern.deny [❓ (required if catalog_pattern is set)] | array(string) | None | ||

| catalog_pattern.ignoreCase [❓ (required if catalog_pattern is set)] | boolean | Whether to ignore case sensitivity during pattern matching. | True | |

| domain [✅] | map(str,AllowDenyPattern) | A class to store allow deny regexes | None | |

domain.key.allow [❓ (required if domain is set)] | array(string) | None | ||

domain.key.deny [❓ (required if domain is set)] | array(string) | None | ||

domain.key.ignoreCase [❓ (required if domain is set)] | boolean | Whether to ignore case sensitivity during pattern matching. | True | |

| metastore_id_pattern [✅] | AllowDenyPattern | Regex patterns for metastore id to filter in ingestion. | {'allow': ['.*'], 'deny': [], 'ignoreCase': True} | |

| metastore_id_pattern.allow [❓ (required if metastore_id_pattern is set)] | array(string) | None | ||

| metastore_id_pattern.deny [❓ (required if metastore_id_pattern is set)] | array(string) | None | ||

| metastore_id_pattern.ignoreCase [❓ (required if metastore_id_pattern is set)] | boolean | Whether to ignore case sensitivity during pattern matching. | True | |

| schema_pattern [✅] | AllowDenyPattern | Regex patterns for schemas to filter in ingestion. Specify regex to only match the schema name. e.g. to match all tables in schema analytics, use the regex 'analytics' | {'allow': ['.*'], 'deny': [], 'ignoreCase': True} | |

| schema_pattern.allow [❓ (required if schema_pattern is set)] | array(string) | None | ||

| schema_pattern.deny [❓ (required if schema_pattern is set)] | array(string) | None | ||

| schema_pattern.ignoreCase [❓ (required if schema_pattern is set)] | boolean | Whether to ignore case sensitivity during pattern matching. | True | |

| table_pattern [✅] | AllowDenyPattern | Regex patterns for tables to filter in ingestion. Specify regex to match the entire table name in catalog.schema.table format. e.g. to match all tables starting with customer in Customer catalog and public schema, use the regex 'Customer.public.customer.*' | {'allow': ['.*'], 'deny': [], 'ignoreCase': True} | |

| table_pattern.allow [❓ (required if table_pattern is set)] | array(string) | None | ||

| table_pattern.deny [❓ (required if table_pattern is set)] | array(string) | None | ||

| table_pattern.ignoreCase [❓ (required if table_pattern is set)] | boolean | Whether to ignore case sensitivity during pattern matching. | True | |

| stateful_ingestion [✅] | StatefulStaleMetadataRemovalConfig | Unity Catalog Stateful Ingestion Config. | None | |

| stateful_ingestion.enabled [❓ (required if stateful_ingestion is set)] | boolean | The type of the ingestion state provider registered with datahub. | None | |

| stateful_ingestion.ignore_new_state [❓ (required if stateful_ingestion is set)] | boolean | If set to True, ignores the current checkpoint state. | None | |

| stateful_ingestion.ignore_old_state [❓ (required if stateful_ingestion is set)] | boolean | If set to True, ignores the previous checkpoint state. | None | |

| stateful_ingestion.remove_stale_metadata [❓ (required if stateful_ingestion is set)] | boolean | Soft-deletes the entities present in the last successful run but missing in the current run with stateful_ingestion enabled. | True |

The JSONSchema for this configuration is inlined below.

{

"title": "UnityCatalogSourceConfig",

"description": "Base configuration class for stateful ingestion for source configs to inherit from.",

"type": "object",

"properties": {

"env": {

"title": "Env",

"description": "The environment that all assets produced by this connector belong to",

"default": "PROD",

"type": "string"

},

"platform_instance": {

"title": "Platform Instance",

"description": "The instance of the platform that all assets produced by this recipe belong to",

"type": "string"

},

"stateful_ingestion": {

"title": "Stateful Ingestion",

"description": "Unity Catalog Stateful Ingestion Config.",

"allOf": [

{

"$ref": "#/definitions/StatefulStaleMetadataRemovalConfig"

}

]

},

"token": {

"title": "Token",

"description": "Databricks personal access token",

"type": "string"

},

"workspace_url": {

"title": "Workspace Url",

"description": "Databricks workspace url",

"type": "string"

},

"workspace_name": {

"title": "Workspace Name",

"description": "Name of the workspace. Default to deployment name present in workspace_url",

"type": "string"

},

"metastore_id_pattern": {

"title": "Metastore Id Pattern",

"description": "Regex patterns for metastore id to filter in ingestion.",

"default": {

"allow": [

".*"

],

"deny": [],

"ignoreCase": true

},

"allOf": [

{

"$ref": "#/definitions/AllowDenyPattern"

}

]

},

"catalog_pattern": {

"title": "Catalog Pattern",

"description": "Regex patterns for catalogs to filter in ingestion. Specify regex to match the catalog name",

"default": {

"allow": [

".*"

],

"deny": [],

"ignoreCase": true

},

"allOf": [

{

"$ref": "#/definitions/AllowDenyPattern"

}

]

},

"schema_pattern": {

"title": "Schema Pattern",

"description": "Regex patterns for schemas to filter in ingestion. Specify regex to only match the schema name. e.g. to match all tables in schema analytics, use the regex 'analytics'",

"default": {

"allow": [

".*"

],

"deny": [],

"ignoreCase": true

},

"allOf": [

{

"$ref": "#/definitions/AllowDenyPattern"

}

]

},

"table_pattern": {

"title": "Table Pattern",

"description": "Regex patterns for tables to filter in ingestion. Specify regex to match the entire table name in catalog.schema.table format. e.g. to match all tables starting with customer in Customer catalog and public schema, use the regex 'Customer.public.customer.*'",

"default": {

"allow": [

".*"

],

"deny": [],

"ignoreCase": true

},

"allOf": [

{

"$ref": "#/definitions/AllowDenyPattern"

}

]

},

"domain": {

"title": "Domain",

"description": "Attach domains to catalogs, schemas or tables during ingestion using regex patterns. Domain key can be a guid like *urn:li:domain:ec428203-ce86-4db3-985d-5a8ee6df32ba* or a string like \"Marketing\".) If you provide strings, then datahub will attempt to resolve this name to a guid, and will error out if this fails. There can be multiple domain keys specified.",

"default": {},

"type": "object",

"additionalProperties": {

"$ref": "#/definitions/AllowDenyPattern"

}

},

"include_table_lineage": {

"title": "Include Table Lineage",

"description": "Option to enable/disable lineage generation.",

"default": true,

"type": "boolean"

},

"include_column_lineage": {

"title": "Include Column Lineage",

"description": "Option to enable/disable lineage generation. Currently we have to call a rest call per column to get column level lineage due to the Databrick api which can slow down ingestion. ",

"default": true,

"type": "boolean"

}

},

"required": [

"token",

"workspace_url"

],

"additionalProperties": false,

"definitions": {

"DynamicTypedStateProviderConfig": {

"title": "DynamicTypedStateProviderConfig",

"type": "object",

"properties": {

"type": {

"title": "Type",

"description": "The type of the state provider to use. For DataHub use `datahub`",

"type": "string"

},

"config": {

"title": "Config",

"description": "The configuration required for initializing the state provider. Default: The datahub_api config if set at pipeline level. Otherwise, the default DatahubClientConfig. See the defaults (https://github.com/datahub-project/datahub/blob/master/metadata-ingestion/src/datahub/ingestion/graph/client.py#L19)."

}

},

"required": [

"type"

],

"additionalProperties": false

},

"StatefulStaleMetadataRemovalConfig": {

"title": "StatefulStaleMetadataRemovalConfig",

"description": "Base specialized config for Stateful Ingestion with stale metadata removal capability.",

"type": "object",

"properties": {

"enabled": {

"title": "Enabled",

"description": "The type of the ingestion state provider registered with datahub.",

"default": false,

"type": "boolean"

},

"ignore_old_state": {

"title": "Ignore Old State",

"description": "If set to True, ignores the previous checkpoint state.",

"default": false,

"type": "boolean"

},

"ignore_new_state": {

"title": "Ignore New State",

"description": "If set to True, ignores the current checkpoint state.",

"default": false,

"type": "boolean"

},

"remove_stale_metadata": {

"title": "Remove Stale Metadata",

"description": "Soft-deletes the entities present in the last successful run but missing in the current run with stateful_ingestion enabled.",

"default": true,

"type": "boolean"

}

},

"additionalProperties": false

},

"AllowDenyPattern": {

"title": "AllowDenyPattern",

"description": "A class to store allow deny regexes",

"type": "object",

"properties": {

"allow": {

"title": "Allow",

"description": "List of regex patterns to include in ingestion",

"default": [

".*"

],

"type": "array",

"items": {

"type": "string"

}

},

"deny": {

"title": "Deny",

"description": "List of regex patterns to exclude from ingestion.",

"default": [],

"type": "array",

"items": {

"type": "string"

}

},

"ignoreCase": {

"title": "Ignorecase",

"description": "Whether to ignore case sensitivity during pattern matching.",

"default": true,

"type": "boolean"

}

},

"additionalProperties": false

}

}

}

Troubleshooting

No data lineage captured or missing lineage

Check that you meet the Unity Catalog lineage requirements.

Also check the Unity Catalog limitations to make sure that lineage would be expected to exist in this case.

Lineage extraction is too slow

Currently, there is no way to get table or column lineage in bulk from the Databricks Unity Catalog REST api. Table lineage calls require one API call per table, and column lineage calls require one API call per column. If you find metadata extraction taking too long, you can turn off column level lineage extraction via the include_column_lineage config flag.

Code Coordinates

- Class Name:

datahub.ingestion.source.unity.source.UnityCatalogSource - Browse on GitHub

Questions

If you've got any questions on configuring ingestion for Databricks, feel free to ping us on our Slack